proSkale Recommends Databricks DQX

Executive Summary

As organizations accelerate AI, analytics, and digital transformation initiatives, data quality has quietly become one of the biggest business risks. Poor data doesn’t just slow reporting – it breaks AI models, erodes trust, increases compliance exposure, and leads to costly decisions.

At proSkale, with 25 years of experience helping enterprises modernize data platforms, we consistently see one pattern:

Most data failures aren’t caused by a lack of data – they’re caused by a lack of data quality controls built into the pipeline.

This is why we recommend and prefer Databricks DQX as a modern, scalable, and business-aligned data quality framework.

The Business Problem: Why Data Quality Is No Longer Optional

Most organizations believe their data is “good enough” – until:

- AI models produce biased or incorrect recommendations

- Financial reports don’t reconcile

- Regulatory audits uncover inconsistencies

- Executives stop trusting dashboards

The root causes are familiar:

- Manual data entry errors

- Disconnected systems and integrations

- Inconsistent definitions across teams

- Volume and velocity that make manual checks impossible

The cost isn’t only technical – it shows up as business impact.

Why Traditional Data Quality Approaches Fall Short

Historically, data quality was treated as:

- A reactive audit

- A post-processing report

- A manual reconciliation exercise

By the time issues were discovered, data was already:

- Used in reports

- Embedded in AI models

- Shared with regulators or customers

This reactive approach no longer works in real-time, AI-driven enterprises.

Databricks DQX: A Proactive, Embedded Approach to Data Quality

Databricks DQX changes the model entirely.

Instead of checking data after the fact, DQX embeds quality checks directly into data pipelines – ensuring that only trusted, rule-compliant data progresses downstream.

In simple terms:

DQX acts as a quality gatekeeper for your data.

It continuously validates data as it flows through the platform – at scale, in real time.

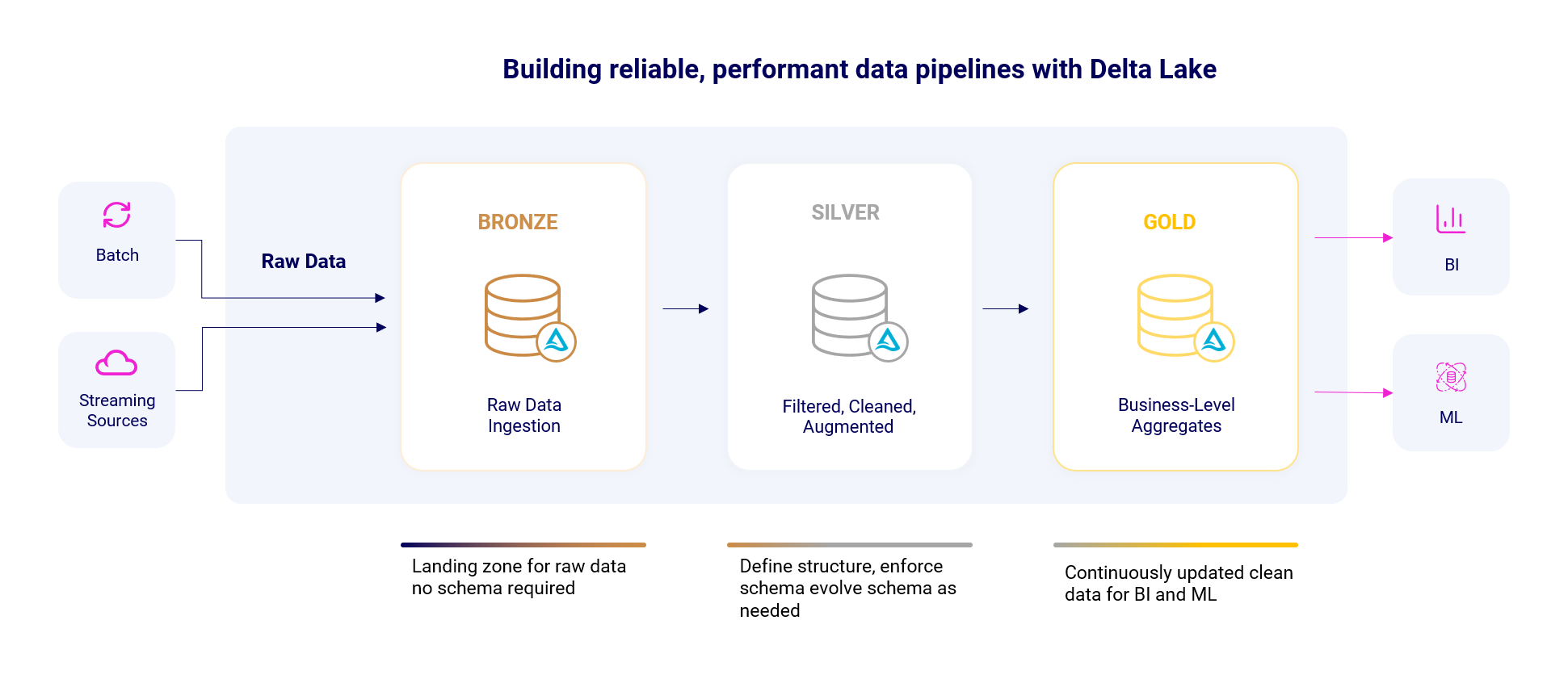

How DQX Fits into the Lakehouse (Bronze → Silver → Gold)

One of the reasons proSkale strongly aligns with DQX is how naturally it integrates into the Lakehouse architecture:

Bronze Layer – Understand the Data Early

- Raw data enters the platform

- DQX profiles structure, patterns, and anomalies

- Quality rules are defined based on real data behavior

Silver Layer – Enforce Quality at Scale

- Rules are actively enforced

- Invalid records are quarantined, not discarded

- Clean records continue forward

Gold Layer – Business-Ready, Trusted Data

- Only high-quality data reaches dashboards, analytics, and AI models

- Business users can trust what they see

This approach ensures quality by design, not by cleanup.
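The Silver-layer step described above can be sketched in a few lines. This is a minimal illustration in plain Python, not the DQX API: the rule names, the `split_valid_and_quarantine` helper, the `_failed_rules` field, and the sample records are all hypothetical.

```python
from typing import Callable

# Hypothetical rules: each name maps to a predicate that returns True
# when a record passes the check.
Rule = Callable[[dict], bool]

RULES: dict[str, Rule] = {
    "customer_id_not_null": lambda r: r.get("customer_id") is not None,
    "amount_non_negative": lambda r: isinstance(r.get("amount"), (int, float))
    and r["amount"] >= 0,
}


def split_valid_and_quarantine(records: list[dict], rules: dict[str, Rule]):
    """Route each record to 'valid' or 'quarantined'; nothing is discarded."""
    valid, quarantined = [], []
    for record in records:
        failed = [name for name, check in rules.items() if not check(record)]
        if failed:
            # Keep the full record and attach the names of the failed rules.
            quarantined.append({**record, "_failed_rules": failed})
        else:
            valid.append(record)
    return valid, quarantined


# Illustrative Bronze input (made-up data).
bronze = [
    {"customer_id": 1, "amount": 120.0},
    {"customer_id": None, "amount": 35.5},
    {"customer_id": 3, "amount": -10.0},
]

silver, quarantine = split_valid_and_quarantine(bronze, RULES)
```

In this sketch only the first record moves on to Silver; the other two stay available in `quarantine`, each tagged with the rule it failed.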

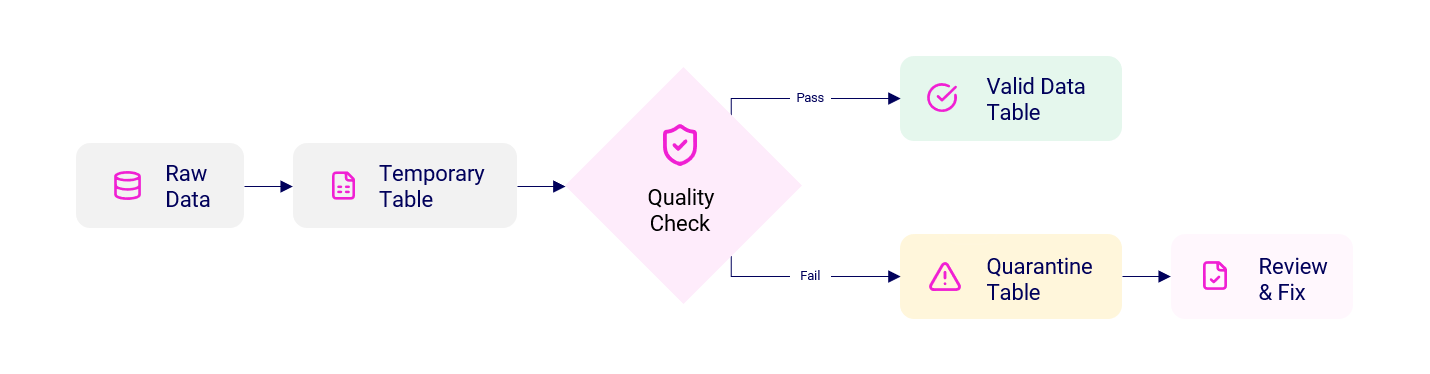

Why Quarantine Matters and Why Business Leaders Should Care

A key strength of DQX, and one we emphasize in our consulting engagements, is data quarantine.

Instead of deleting bad data or mixing it with good data:

- Invalid records are isolated

- The reason for failure is documented

- Issues can be fixed, audited, and reintroduced

For regulated industries (healthcare, finance, insurance), this traceability is critical for governance and compliance.

What Makes Databricks DQX Enterprise-Ready

From a business perspective, DQX stands out because it:

- Explains why data failed, not just that it failed

- Works across batch and streaming data

- Distinguishes warnings from errors (not all issues are equal)

- Operates at row and column level

- Integrates natively with Databricks pipelines

- Scales with the pipeline’s existing compute, without adding a separate tool to operate

In short:

DQX turns data quality from a technical afterthought into a strategic capability.
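To make the warning-versus-error distinction from the list above concrete, here is a minimal sketch in plain Python. It is an illustration under assumed names, not the DQX API: the `CHECKS` structure, the `evaluate` and `route` helpers, and the severity labels are hypothetical.

```python
# Hypothetical check definitions: "error" blocks a record, "warn" only flags it.
CHECKS = [
    {
        "name": "order_id_not_null",
        "criticality": "error",
        "passes": lambda r: r.get("order_id") is not None,
    },
    {
        "name": "country_is_known",
        "criticality": "warn",
        "passes": lambda r: r.get("country") in {"US", "DE", "IN"},
    },
]


def evaluate(record: dict, checks: list[dict]) -> dict:
    """Collect the names of failed checks, grouped by severity."""
    errors, warnings = [], []
    for check in checks:
        if not check["passes"](record):
            target = errors if check["criticality"] == "error" else warnings
            target.append(check["name"])
    return {"errors": errors, "warnings": warnings}


def route(record: dict, checks: list[dict]):
    """Errors send a record to quarantine; warnings let it through, flagged."""
    result = evaluate(record, checks)
    destination = "quarantine" if result["errors"] else "silver"
    return destination, result


flagged = route({"order_id": 1, "country": "FR"}, CHECKS)
blocked = route({"order_id": None, "country": "US"}, CHECKS)
```

Here the record with an unknown country still reaches Silver but carries a warning explaining why, while the record with no order ID is held back. That is the practical meaning of "explains why data failed" combined with "not all issues are equal".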

Why proSkale Recommends and Prefers Databricks DQX

At proSkale, our recommendation is grounded in experience – not theory.

Over 25 years, we’ve helped organizations:

- Modernize legacy data platforms

- Enable AI-driven decisioning

- Meet regulatory and audit requirements

- Restore executive trust in analytics

We prefer DQX because it aligns with how modern businesses actually operate:

- Fast-moving

- Data-driven

- AI-enabled

- Highly regulated

DQX enables:

- Faster time to insight

- Lower operational risk

- Stronger AI governance

- Sustainable data quality at scale

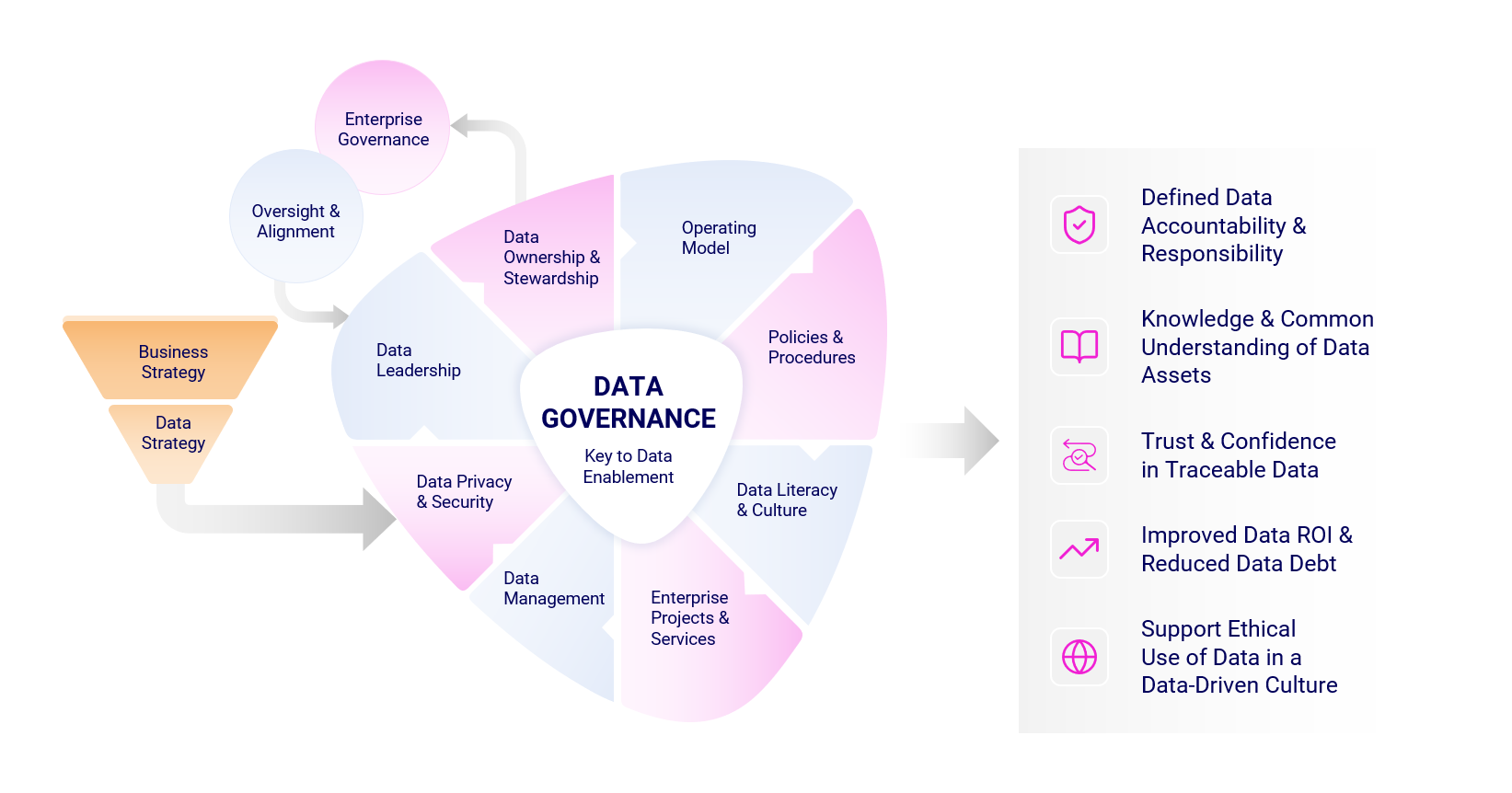

Data Quality Is the Foundation of Trustworthy AI

One of the most important lessons we share with clients is:

Innovation without data quality and AI guardrails isn’t progress – it’s risk.

DQX helps ensure:

- AI models learn from accurate data

- Decisions are explainable and auditable

- Organizations can scale AI responsibly

This makes DQX a foundational component of trustworthy AI strategies.

Final Thoughts

Data quality is no longer a backend concern – it is a business imperative.

Databricks DQX provides a modern, scalable way to:

- Proactively manage data quality

- Protect analytics and AI investments

- Enable confident, data-driven decisions

At proSkale, we don’t just implement tools – we help organizations build resilient data foundations that last.

Want help implementing Databricks DQX the right way?

proSkale partners with enterprises to:

- Design data quality strategies

- Embed DQX into Lakehouse architectures

- Align data quality with business outcomes